Genesis of the WaymoPulseSF project

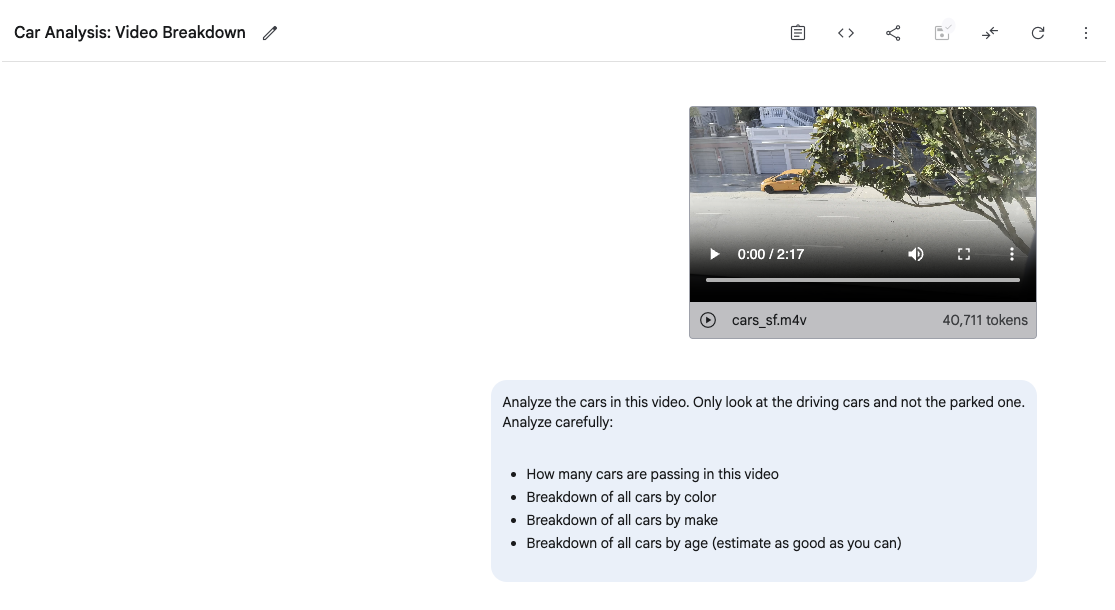

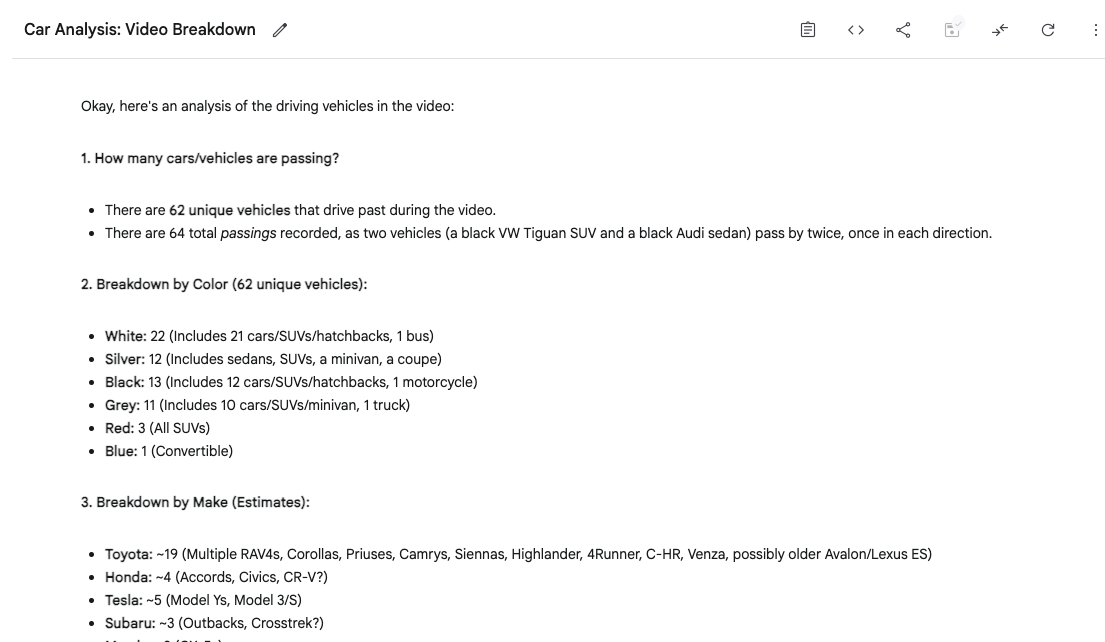

I was inspired by Alessio (from Latent Space) over a coffee to dive deeper into video analysis and model capabilities. I wanted to determine how effective Gemini 2.5 Pro is for this purpose. To test this, I filmed a short (~2 minute) clip out of my window and used Gemini in Google AI Studio to run a basic analysis of the cars that passed by. The analysis focused on identifying the car colors, makes, and approximate ages. Despite the somewhat poor video quality (due to obstructions like a tree), the analysis was quite accurate: the overall detection count was within 10-15% of reality, and about 80% of the individual timestamps were correct. That is comparable to what a human would achieve without meticulous multi-pass verification, which I found quite mind-blowing.

Practical use-case exploration

To explore a practical use case, I decided to count the number of Waymo vehicles and their percentage of total vehicles at my specific location in the Lower Pacific Heights neighborhood of San Francisco. I purchased a Raspberry Pi 5 and a camera module on Saturday and set them up with the help of Gemini 2.5 Pro in approximately 2 hours on Sunday morning. Around 9 am I began recording looped 5-minute clips to feed to Gemini for analysis.

Although the setup was very patchy and not well-mounted, as shown in the pictures, I achieved my goal of having it all working within 24 hours, before the end of Sunday.
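
A minimal sketch of what such a recording loop could look like, assuming the `rpicam-vid` command-line tool that ships with recent Raspberry Pi OS releases (older releases call it `libcamera-vid`). The output directory, file naming, and function names are illustrative assumptions, not the script actually used here.

```python
import subprocess
from datetime import datetime
from pathlib import Path

CLIP_SECONDS = 5 * 60  # 5-minute clips, as described above


def clip_path(out_dir: Path, started: datetime) -> Path:
    """Name each clip after its start time so clips sort chronologically."""
    return out_dir / f"clip_{started:%Y%m%d_%H%M%S}.h264"


def record_command(path: Path, seconds: int = CLIP_SECONDS) -> list[str]:
    """Build the camera command; -t is the recording duration in milliseconds."""
    return ["rpicam-vid", "-t", str(seconds * 1000), "-o", str(path)]


def record_forever(out_dir: Path) -> None:
    """Record back-to-back clips until the process is interrupted."""
    out_dir.mkdir(parents=True, exist_ok=True)
    while True:
        path = clip_path(out_dir, datetime.now())
        subprocess.run(record_command(path), check=True)
```

Calling `record_forever(Path("recordings"))` on the Pi would start the loop. Encoding the start time in the file name keeps the wall-clock time of every clip recoverable later.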

Initial Experiment in Google AI Studio

Screenshot from the video (with a Waymo sighting). The quality and mounting are obviously not great, but even then Gemini can analyze it well.

Prompting the analysis into existence

I then (with the help of Gemini) created a set of prompts (see below), ran them across the recorded 5-minute files, and had a simple script aggregate the results.

System Prompt:

You are an expert vehicle analysis system specializing in identifying, counting, and categorizing vehicles in video content.

Your analysis is precise, methodical, and presented in structured formats.

You can distinguish between different vehicle makes, estimate vehicle ages, and identify special vehicle types such as autonomous vehicles.

You always ignore parked vehicles and focus only on those in motion. Your output is comprehensive, accurate, and formatted according to specific JSON schemas.

Prompt:

I need you to analyze all vehicles in motion in a video starting at {$start_time}. Examine only the vehicles that are driving/moving (not parked vehicles).

Provide the following analysis:

1. Count all cars passing in this video

2. Create a detailed breakdown of all cars by color (with counts for each color)

3. Create a detailed breakdown of all cars by make (with counts for each manufacturer)

4. Estimate the age of each car and categorize them as:

- New: 0-5 years old

- Recent: 5-10 years old

- Old: 10+ years old

5. Identify all Waymo autonomous vehicles with timestamps when they appear in the video

6. Calculate what percentage of total cars are Waymo vehicles

7. Count all motorbikes with timestamps when they appear in the video

8. Calculate what percentage of total vehicles (cars + motorbikes) are motorbikes

For each vehicle, note:

- Timestamp of appearance (relative to the start of the video in the format MM:SS)

- Color (e.g. red, blue, etc. - use lower case)

- Make (manufacturer) (e.g. Toyota, Honda, etc.) - for Waymo vehicles, use "Waymo" (not Jaguar, only use Jaguar for non Waymo Jaguars). If you cannot determine the make, use "Unknown"

- Estimated age (in years)

- Estimated value (in USD) - for Waymo vehicles, use $150,000

- Brief description of the car and its position in the video / overall analysis

Be methodical and thorough in your analysis. If you cannot determine certain details with confidence, provide your best estimate and indicate uncertainty.

Present your final output in JSON format that adheres to the following JSON schema: {$json_schema}
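
The `{$start_time}` and `{$json_schema}` placeholders in the prompt have to be filled in for every clip. A small sketch of one way to do that with plain string replacement; the function name and the example values are assumptions for illustration only.

```python
import json


def render_prompt(template: str, start_time: str, schema: dict) -> str:
    """Fill the two {$...} placeholders used in the prompt above."""
    return (
        template
        .replace("{$start_time}", start_time)
        .replace("{$json_schema}", json.dumps(schema, indent=2))
    )


# Shortened stand-in for the full prompt, just to show the substitution.
template = "Analyze a video starting at {$start_time}. Schema: {$json_schema}"
prompt = render_prompt(template, "09:05", {"type": "object"})
print(prompt)
```

Plain `str.replace` is used because the `{$name}` syntax matches neither Python's `str.format` nor `string.Template` placeholders.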

JSON schema:

{

"type": "object",

"properties": {

"cars": {

"type": "object",

"properties": {

"total_cars": {"type": "integer"},

"details": {

"type": "array",

"items": {

"type": "object",

"properties": {

"number": {"type": "integer"},

"timestamp": {

"type": "string",

"description": "timestamp of the car in the video - relative to the start of the video in the format MM:SS"

},

"color": {

"type": "string",

"description": "color of the car (e.g. red, blue, etc.)"

},

"make": {

"type": "string",

"description": "make of the car (e.g. Toyota, Honda, etc.)"

},

"estimated_age": {

"type": "integer",

"description": "estimated age of the car in years"

},

"estimated_value": {

"type": "string",

"description": "estimated value of the car in USD"

},

"description": {

"type": "string",

"description": "description of the car and its position in the video / overall analysis"

}

},

"required": [

"number",

"timestamp",

"color",

"make",

"estimated_age",

"estimated_value",

"description"

],

"additionalProperties": false

}

}

},

"required": ["total_cars", "details"],

"additionalProperties": false

},

"cars_by_color": {

"type": "array",

"items": {

"type": "object",

"properties": {

"color": {"type": "string"},

"count": {"type": "integer"}

},

"required": ["color", "count"],

"additionalProperties": false

}

},

"cars_by_age": {

"type": "object",

"properties": {

"new": {"type": "integer", "description": "0-5 years"},

"recent": {"type": "integer", "description": "5-10 years"},

"old": {"type": "integer", "description": "10+ years"}

},

"required": ["new", "recent", "old"],

"additionalProperties": false

},

"cars_by_make": {

"type": "array",

"items": {

"type": "object",

"properties": {

"make": {

"type": "string",

"description": "make of the car (e.g. Toyota, Honda, etc.)"

},

"count": {

"type": "integer",

"description": "number of cars of this make"

}

},

"required": ["make", "count"],

"additionalProperties": false

}

},

"waymo": {

"type": "object",

"properties": {

"count": {"type": "integer"},

"percentage": {"type": "number"},

"timestamps": {

"type": "array",

"items": {"type": "string"}

},

"description": {"type": "string"}

},

"required": ["count", "percentage", "timestamps", "description"],

"additionalProperties": false

},

"motorbikes": {

"type": "object",

"properties": {

"count": {"type": "integer"},

"percentage": {"type": "number"},

"timestamps": {

"type": "array",

"items": {"type": "string"}

},

"description": {"type": "string"}

},

"required": ["count", "percentage", "timestamps", "description"],

"additionalProperties": false

}

},

"required": [

"cars",

"cars_by_color",

"cars_by_age",

"cars_by_make",

"waymo",

"motorbikes"

],

"additionalProperties": false

}
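
The aggregation step can be sketched as follows, assuming each 5-minute clip yields one JSON object that follows the schema above. This is an illustrative sketch rather than the script used for the project, and the sample numbers are made up.

```python
from typing import Iterable


def aggregate(results: Iterable[dict]) -> dict:
    """Sum per-clip counts and recompute the Waymo share over all clips."""
    total_cars = 0
    waymo = 0
    for result in results:
        total_cars += result["cars"]["total_cars"]
        waymo += result["waymo"]["count"]
    share = 100 * waymo / total_cars if total_cars else 0.0
    return {
        "total_cars": total_cars,
        "waymo": waymo,
        "waymo_percentage": round(share, 2),
    }


# Two made-up clip results, reduced to the fields the aggregator reads.
clips = [
    {"cars": {"total_cars": 40}, "waymo": {"count": 2}},
    {"cars": {"total_cars": 60}, "waymo": {"count": 1}},
]
summary = aggregate(clips)
print(summary)
```

The percentage is recomputed from the summed counts instead of averaging the per-clip `percentage` fields, so busy and quiet clips are weighted by how many cars they actually contain.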

The initial analysis and outcome:

Gemini 2.5 Pro was used to conduct a traffic analysis over a 13-hour period (8 am - 9 pm) on the initial day, which identified 380 Waymo vehicles (3.39% of all traffic). You can see the analysis here if you scroll down.

**Initial Experiment notes**

- Gemini 2.5 Pro occasionally double-counted vehicles. However, this did not appear to bias the data, as Waymo and non-Waymo vehicles were equally affected. The overall accuracy rate was above 85%.

- The loop recording script had 5-10 second gaps between recordings, which likely resulted in a 2-3% undercount of total vehicles. Again, this did not appear to bias the percentages, given that all vehicle types were equally likely to be missed.

- There were two gaps (~20 min total) in the recording due to the Wi-Fi crashing and the script being attached to an active SSH session. The script has since been improved to prevent this issue, and I then ran it in the background with the `screen` command, detached from my SSH session.

- The open-ended prompting led to some vehicles being identified under different brands (e.g. Waymo, Jaguar, or Waymo/Jaguar). Future experiments will include stricter identification parameters.

- The total cost of the experiment was $17.11 (~$1.30/hour), which is extremely cost-effective for a pilot.
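
As a quick sanity check, the headline numbers above can be related to each other with a few lines of arithmetic. The inputs are the figures reported in this section. The derived values are only approximate, since the 13 hours include the recording gaps mentioned above.

```python
waymo_count = 380        # Waymo vehicles identified on the first day
waymo_share = 0.0339     # 3.39% of all traffic
hours = 13               # 8 am to 9 pm
total_cost_usd = 17.11

implied_total = waymo_count / waymo_share   # total vehicles implied by the share
cost_per_hour = total_cost_usd / hours
waymos_per_hour = waymo_count / hours

print(f"implied total vehicles: {implied_total:,.0f}")  # 11,209
print(f"cost per hour: ${cost_per_hour:.2f}")            # $1.32
print(f"Waymos per hour: {waymos_per_hour:.1f}")         # 29.2
```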

What impressed me is that I can now achieve such an analysis in maybe 6 hours of total time spent (~2 hours of which went into setting up the Raspberry Pi). In the past, with classical ML, it would have taken days of work and fine-tuning of models. Now an initial analysis is available at 100x less cost, and the Gemini 2.5 Pro model could easily be used to further fine-tune customized models.

Fun Detour: Spotting Dogs

While sharing the project with my wife, Lina, she playfully asked if the system could spot the cute dogs passing by our Airbnb. Intrigued, I made a quick adjustment to the prompt. Voilà! The system could now identify pedestrians with dogs, accurately pinpointing our furry neighbors.

Added to the prompt and the JSON validation schema:

Identify all pedestrians, noting their start/end timestamps, whether they have a dog, and a brief description of their activity (and dog if present).

"pedestrians": {

"type": "object",

"properties": {

"count": {

"type": "integer",

"description": "number of pedestrians in the video"

},

"details": {

"type": "array",

"items": {

"type": "object",

"properties": {

"number": {

"type": "integer",

"description": "number of the pedestrian in the video (e.g. '1') - this is the order of appearence relative to other pedestrians"

},

"timestamp_start": {

"type": "integer",

"description": "timestamp of the pedestrian in the video first seen - relative to the start of the video in seconds"

},

"timestamp_end": {

"type": "integer",

"description": "timestamp of the pedestrian in the video last seen - relative to the start of the video in seconds"

},

"has_dog": {

"type": "boolean",

"description": "Indicates if the pedestrian has a dog"

},

"description": {

"type": "string",

"description": "What is the pedestrian doing, and if he has a dog describe the dog."

}

},

"required": [

"number",

"timestamp_start",

"timestamp_end",

"has_dog",

"description"

],

"additionalProperties": false

}

}

},

"required": ["count", "details"],

"additionalProperties": false

}

This little experiment highlights the remarkable flexibility of LLMs for rapid prototyping. Accomplishing this with traditional ML methods would have required creating entirely new annotated datasets—a task demanding days, if not weeks, of effort. In contrast, adapting the LLM took just a few minutes with minimal code changes, demonstrating the power of this approach for quickly exploring new ideas.
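
On the consuming side, the new `pedestrians` block needs almost no extra code either. A sketch of pulling out the dog sightings from one response, assuming it follows the schema extension above; the sample data is invented for illustration.

```python
def pedestrians_with_dogs(result: dict) -> list[dict]:
    """Return the pedestrian entries whose has_dog flag is set."""
    return [p for p in result["pedestrians"]["details"] if p["has_dog"]]


# Invented sample response, reduced to the pedestrians block.
sample = {
    "pedestrians": {
        "count": 2,
        "details": [
            {"number": 1, "timestamp_start": 12, "timestamp_end": 20,
             "has_dog": False, "description": "Person jogging."},
            {"number": 2, "timestamp_start": 95, "timestamp_end": 110,
             "has_dog": True, "description": "Person walking a small dog."},
        ],
    }
}

for p in pedestrians_with_dogs(sample):
    print(p["timestamp_start"], p["description"])
```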

The other cool thing: the model even picked up the brand-new Zeekr Waymo robotaxi, which I would likely have missed with any custom model due to its different design and look. It also picked up tons of other robotaxis (sometimes mistakenly identified as Waymos).

Further refinements and prompt hacks

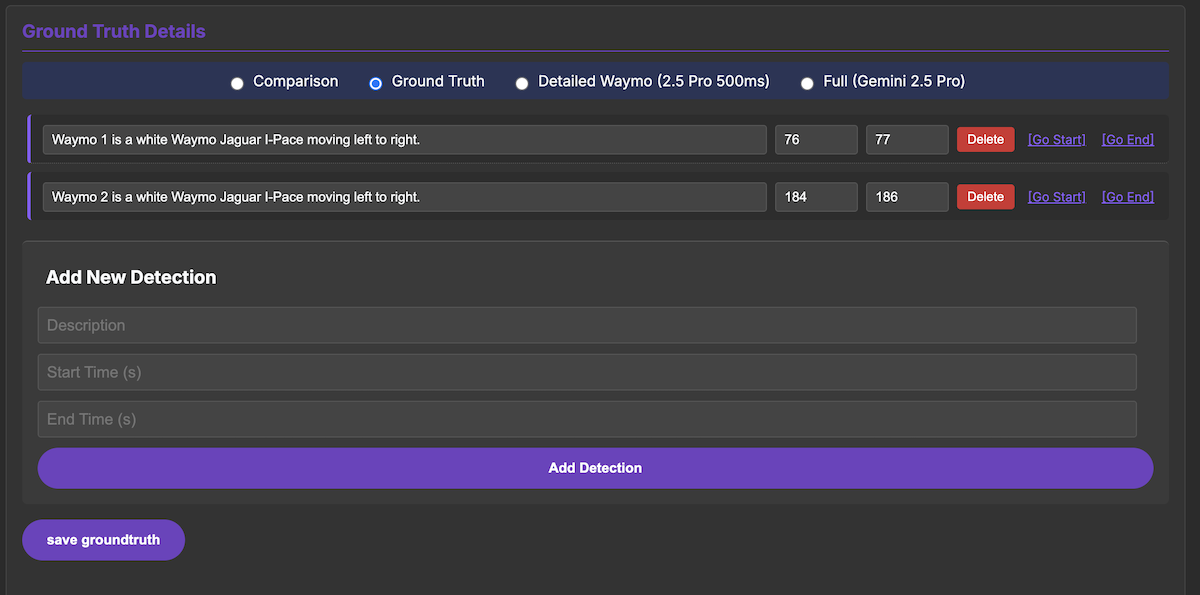

After meeting Mat Velloso at Google and sharing some details about the project, I decided to adopt a more methodical approach to evaluating the results, especially given the increasing emphasis on the importance of rigorous evaluations in the field.

To establish a baseline for comparison, I annotated one day's worth of video footage to create ground truth data. This process, while somewhat cumbersome, was facilitated by a custom-built annotation tool.

With the ground truth data prepared, I ran evaluations using a simplified script focused solely on counting Waymo vehicles, rather than analyzing all cars. For these evaluations, a detection was considered correct if its timestamp was within a 5-second window of the actual vehicle appearance in the video.
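
The matching rule can be sketched as below. Two details are assumptions on my part, not taken from the evaluation script: the window is treated as plus or minus 5 seconds, and each ground-truth vehicle can be matched by at most one detection, so a double-counted vehicle shows up as a false positive.

```python
def match_detections(predicted, ground_truth, tolerance=5.0):
    """Greedy one-to-one matching of timestamps given in seconds.

    Returns (true_positives, false_positives, false_negatives).
    """
    preds = sorted(predicted)
    truths = sorted(ground_truth)
    i = j = tp = 0
    while i < len(preds) and j < len(truths):
        diff = preds[i] - truths[j]
        if abs(diff) <= tolerance:
            tp += 1          # detection and vehicle pair up
            i += 1
            j += 1
        elif diff < 0:
            i += 1           # detection too early for any remaining vehicle
        else:
            j += 1           # vehicle passed without a matching detection
    return tp, len(preds) - tp, len(truths) - tp


print(match_detections([12, 80, 200], [10, 78, 150]))  # (2, 1, 1)
print(match_detections([10, 12], [11]))                # (1, 1, 0)
```

The second call shows the double-counting case: both detections fall inside the window of the same vehicle, but only one of them counts as a true positive.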

| Model Name | Model Code | Precision | Recall | F1 Score | TP | FP | FN | GT Positives | Pred. Positives |

|---|---|---|---|---|---|---|---|---|---|

| Waymo (Gemini 2.5 Pro) | waymo | 0.8072 | 0.9633 | 0.8784 | 473 | 113 | 18 | 491 | 586 |

| Full (Gemini 2.5 Pro) | base | 0.7945 | 0.8187 | 0.8064 | 402 | 104 | 89 | 491 | 506 |

| Waymo Flash (Gemini 2.5 Flash) | waymo_flash | 0.7628 | 0.7271 | 0.7445 | 357 | 111 | 134 | 491 | 468 |

| Flash (Gemini 2.5 Flash) | flash | 0.6612 | 0.6599 | 0.6605 | 324 | 166 | 167 | 491 | 490 |
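
The metric columns in the table follow directly from the TP, FP, and FN counts. A short sketch using the standard definitions, checked against the first row:

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Standard precision, recall, and F1 from raw detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Row "Waymo (Gemini 2.5 Pro)": TP=473, FP=113, FN=18
p, r, f1 = precision_recall_f1(473, 113, 18)
print(f"{p:.4f} {r:.4f} {f1:.4f}")  # 0.8072 0.9633 0.8784
```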

Initially, even without specific tuning, the analysis achieved an F1 Score (https://en.wikipedia.org/wiki/F-score) of 0.88, indicating a decent level of accuracy for a straightforward prompt. This result reinforced the importance of the F1 score as a key optimization metric (a principle impressed on me by my beloved Geo Data Science team - thanks Adi, Hannes & co). However, reviewing the results revealed some funky anomalies. For instance, a Waymo vehicle was incorrectly detected at 118 seconds in one video, where no such vehicle was present. Upon closer inspection, a Waymo did appear at 1 minute and 18 seconds (1:18), which is 78 seconds into the video. It appeared the model misinterpreted the prompt's request for timestamps in seconds and mistakenly turned "1:18" directly into "118" seconds. Correcting this involved a simple prompt change: I modified it to request timestamps in MM:SS format instead of total seconds. This single adjustment improved the F1 score by approximately 2 percentage points, from 0.8784 to 0.8990.
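
With the model returning MM:SS strings, the conversion to seconds can happen in code, where it is deterministic. A small sketch (the function name is my own) that also reproduces the misreading described above:

```python
def mmss_to_seconds(stamp: str) -> int:
    """Convert an 'MM:SS' (or 'M:SS') timestamp to total seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + int(seconds)


correct = mmss_to_seconds("1:18")
misread = int("1:18".replace(":", ""))  # what dropping the colon produces

print(correct)  # 78
print(misread)  # 118
```

The two values are 40 seconds apart, far outside a 5-second matching window, so a single misread timestamp produces both a false positive and a false negative.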

Manually splitting the video

With those results I was encouraged to try something else: manually splitting the video. OpenAI has a good cookbook on working with videos in their API (unlike Google, they don't have native video support). So I decided to use ffmpeg to split the video into frames, manually add a timestamp to each frame, and try that. This also gave me the chance to try the OpenAI API with GPT-4.1 and o4-mini, which are both very good at vision.
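
A sketch of how the splitting could be done, assuming ffmpeg's `fps` filter for sampling and a timestamp label derived from each frame's index. Whether the label is drawn onto the image or sent as text next to it is not specified here, so this only computes the label; all function and file names are illustrative.

```python
def ffmpeg_frames_command(video: str, out_dir: str, interval_ms: int = 500) -> list[str]:
    """Build an ffmpeg command that writes one JPEG every interval_ms."""
    return [
        "ffmpeg", "-i", video,
        "-vf", f"fps=1000/{interval_ms}",   # e.g. 1000/500 = 2 frames per second
        f"{out_dir}/frame_%05d.jpg",        # ffmpeg numbers frames from 1
    ]


def frame_label(index: int, interval_ms: int = 500) -> str:
    """Approximate MM:SS.mmm position of the 1-based frame index."""
    total_ms = (index - 1) * interval_ms
    minutes, rem = divmod(total_ms, 60_000)
    seconds, ms = divmod(rem, 1000)
    return f"{minutes:02d}:{seconds:02d}.{ms:03d}"


print(ffmpeg_frames_command("clip.mp4", "frames"))
print(frame_label(1))    # 00:00.000
print(frame_label(157))  # 01:18.000
```

The command list can be passed to `subprocess.run`. The labels are approximate because the `fps` filter picks the nearest source frame for each sampling point.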

Manually splitting led to a major uplift in scores.

| Model Name | Model Code | Precision | Recall | F1 Score | TP | FP | FN | GT Positives | Pred. Positives |

|---|---|---|---|---|---|---|---|---|---|

| Waymo (Gemini 2.5 Pro 500ms) | waymo_500ms | 0.8571 | 0.9898 | 0.9187 | 486 | 81 | 5 | 491 | 567 |

| Timestamp (Gemini 2.5 Pro) | timestamp | 0.8307 | 0.9796 | 0.8990 | 481 | 98 | 10 | 491 | 579 |

| Waymo (GPT 4.1 700ms) | openai_waymo_700ms | 0.9050 | 0.8534 | 0.8784 | 419 | 44 | 72 | 491 | 463 |

| Waymo (OpenAI GPT-4.1) | openai_waymo | 0.9251 | 0.8045 | 0.8606 | 395 | 32 | 96 | 491 | 427 |

| Waymo (OpenAI o4-mini) | o4mini_waymo | 0.8838 | 0.6660 | 0.7596 | 327 | 43 | 164 | 491 | 370 |

This manual frame splitting approach yielded an additional improvement of roughly 2 percentage points in the F1 score over the previous timestamp-based method, bringing it from 0.8990 to 0.9187. This gain coincided with an approximate doubling of token usage, likely attributable to the finer granularity (processing frames at 500ms intervals versus the estimated 1-second intervals of Google's native video processing).

An attempt to replicate this with the OpenAI API (using GPT-4.1) encountered technical hurdles. Apparent API limitations on the number of images per request (around 400 frames) and subsequent connection errors prevented the use of 500ms splits. Therefore, a 700ms interval was used for the OpenAI analysis instead.
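
To put the two intervals in perspective, here is the frame count per request, assuming a full 5-minute clip is sent in a single request (an assumption; the request size is not stated above).

```python
def frames_per_clip(clip_seconds: int, interval_ms: int) -> int:
    """Number of frames sampled at t = 0, interval, 2 * interval, ... within the clip."""
    return -(-clip_seconds * 1000 // interval_ms)  # ceiling division


print(frames_per_clip(300, 500))  # 600
print(frames_per_clip(300, 700))  # 429
```

Under that assumption, 500ms splits produce about 600 images per request, while 700ms splits bring this down to roughly 430, close to the limit of around 400 frames mentioned above.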

Despite this limitation, both platforms produced robust results. Considering Gemini offered comparable performance at roughly half the cost, it was chosen for the subsequent steps, though OpenAI clearly presents a capable alternative.

Better instructions in Video

The final tweak was to add a few more instructions that help prevent common errors I had seen, and this led to a surprisingly large bump in accuracy.

Specifically, I added these clarifications to the prompt:

This video is taken at Pine Street in San Francisco.

It is a one way street, and all cars are driving in the same direction (in this video from right to left).

Without traffic it takes a car ~2 seconds to drive through the entire video (with traffic it can take longer).

## Common issues to be aware in your analysis

- Sometimes two Waymo vehicles are following each other closely, and are therefore recognized as a single vehicle by the system.

- Often some other white cars are mistaken for Waymo vehicles.

- Other vehicles with Lidar sensors are also recognized as Waymo vehicles (but are actually not Waymo vehicles)

- Not every video has any Waymo vehicles in motion. There are videos where only one Waymo vehicle is in motion, and sometimes none.

Final leaderboard

| Model Name | Model Code | Precision | Recall | F1 Score | TP | FP | FN | GT Positives | Pred. Positives |

|---|---|---|---|---|---|---|---|---|---|

| Detailed Waymo (2.5 Pro 500ms) | detail_waymo_500ms | 0.9280 | 0.9980 | 0.9617 | 490 | 38 | 1 | 491 | 528 |

| Waymo (Gemini 2.5 Pro 500ms) | waymo_500ms | 0.8571 | 0.9898 | 0.9187 | 486 | 81 | 5 | 491 | 567 |

| Timestamp (Gemini 2.5 Pro) | timestamp | 0.8307 | 0.9796 | 0.8990 | 481 | 98 | 10 | 491 | 579 |

| Waymo (Gemini 2.5 Pro) | waymo | 0.8072 | 0.9633 | 0.8784 | 473 | 113 | 18 | 491 | 586 |

| Waymo (GPT 4.1 700ms) | openai_waymo_700ms | 0.9050 | 0.8534 | 0.8784 | 419 | 44 | 72 | 491 | 463 |

| Waymo (OpenAI GPT-4.1) | openai_waymo | 0.9251 | 0.8045 | 0.8606 | 395 | 32 | 96 | 491 | 427 |

| Full (Gemini 2.5 Pro) | base | 0.7945 | 0.8187 | 0.8064 | 402 | 104 | 89 | 491 | 506 |

| Waymo (OpenAI o4-mini) | o4mini_waymo | 0.8838 | 0.6660 | 0.7596 | 327 | 43 | 164 | 491 | 370 |

| Waymo Flash (Gemini 2.5 Flash) | waymo_flash | 0.7628 | 0.7271 | 0.7445 | 357 | 111 | 134 | 491 | 468 |

| Flash (Gemini 2.5 Flash) | flash | 0.6612 | 0.6599 | 0.6605 | 324 | 166 | 167 | 491 | 490 |

As you can see, these simple clarifications boosted the F1 score to 0.9617. This level of accuracy from a standard LLM detection method is impressive and provides a solid baseline for experiments.

While I stopped optimizing at this point, I'm confident further gains are possible. For instance, adding a verification step (e.g., "You detected a Waymo car at this timestamp; is this correct?") could likely improve the F1 score by another 2-3%.
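
Such a verification pass was not implemented here, but a sketch shows how little code it would need. The `confirm` callable is a hypothetical wrapper around a second model call (for example with the frames around the timestamp attached) that returns True or False; it is stubbed out below.

```python
from typing import Callable


def verification_prompt(timestamp: str) -> str:
    """Second-pass question for a single detection."""
    return (
        f"You detected a Waymo car at {timestamp}; is this correct? "
        "Answer yes or no."
    )


def verify_detections(
    timestamps: list[str], confirm: Callable[[str], bool]
) -> list[str]:
    """Keep only the detections that the second pass confirms."""
    return [ts for ts in timestamps if confirm(verification_prompt(ts))]


# Stub standing in for the model call: it only confirms the 01:18 detection.
def fake_confirm(prompt: str) -> bool:
    return "01:18" in prompt


print(verify_detections(["00:42", "01:18"], fake_confirm))  # ['01:18']
```

A pass like this can only remove false positives, so it would raise precision, while recall would stay the same at best.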

Implications:

- This LLM-based approach offers a significantly faster and more cost-effective way to prototype video analytics compared to traditional custom-trained models. Development time can shrink from weeks to hours, accelerating time-to-impact by 10-100x.

- Current analysis costs using Gemini 2.5 Pro are around $1.30 per hour. Based on the trajectory of AI model progress, I expect this to drop below $0.10 within a year and potentially under $0.01 within two years. Costs could be reduced further even now through optimizations like lowering the recording frame rate (e.g., from 30 FPS to 5 FPS). This would allow substantial cost savings without losing essential details.

- While custom models will still likely offer superior performance for scaled applications (and make sense once a use case is validated), this LLM method is ideal for quickly establishing product-market fit for video analytics ideas before committing to extensive development efforts.

Thank you

This was a fun hobby project. I encourage you to play with it and give me feedback. Special thanks to Mat Velloso for the fun chat and lunch at Google, and Alessio Fanelli for the inspiration to dive into video analysis.

If you have any questions, feel free to reach out below.